RECKONING: Reasoning through Dynamic Knowledge Encoding: Generalization to Real-World knowledge

Table of Links

Abstract and 1. Introduction

2. Background

3. Method

4. Experiments

4.1 Multi-hop Reasoning Performance

4.2 Reasoning with Distractors

4.3 Generalization to Real-World knowledge

4.4 Run-time Analysis

4.5 Memorizing Knowledge

5. Related Work

6. Conclusion, Acknowledgements, and References

A. Dataset

B. In-context Reasoning with Distractors

C. Implementation Details

D. Adaptive Learning Rate

E. Experiments with Large Language Models

4.3 Generalization to Real-World knowledge

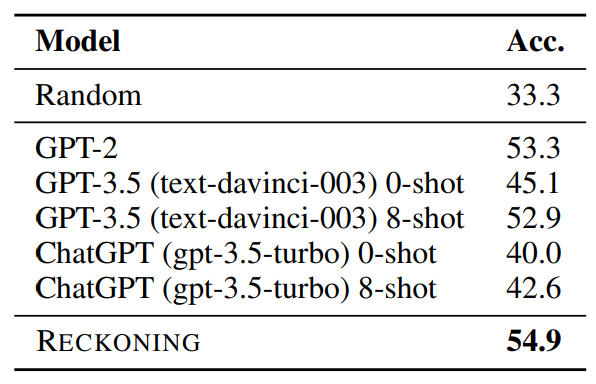

To investigate how well our method generalizes to real-world knowledge beyond the synthetic setting, we evaluate RECKONING on a more realistic multi-hop logical reasoning task, FOLIO [29], and report the results in Table 2. The dataset has a rich vocabulary, diverse logic patterns, and abundant language variations, and it has been shown to challenge LLMs in both supervised fine-tuning and in-context learning settings. As the baseline, we fine-tune GPT-2 in the in-context reasoning setting. As before, we train both GPT-2 and RECKONING using the multi-task objective. We also compare against more advanced baselines, GPT-3.5 (text-davinci-003 [55]) and ChatGPT (gpt-3.5-turbo [2]), two popular large language models with around 175B parameters. We evaluate these two large models in both the zero-shot and few-shot settings. In the few-shot setting, we prompt the model with 8 single-task examples randomly sampled from the training set to perform in-context learning. We find that RECKONING (initialized here from GPT-2) performs better than the GPT-2 in-context reasoning baseline. RECKONING also outperforms the two advanced large language models by a significant margin (12% in the zero-shot setting and 7% in the 8-shot setting). We conclude that RECKONING is effective and significantly benefits reasoning tasks that use real-world knowledge.
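The few-shot setting described above can be illustrated with a minimal sketch of how an 8-shot prompt might be assembled from randomly sampled training examples. This is not the authors' code: the example schema (`facts`, `question`, `answer`), the prompt template, and the function names `format_example` and `build_few_shot_prompt` are assumptions made for illustration only.

```python
import random
from typing import Dict, List

# Hypothetical schema (an assumption, not the paper's data format):
# each example is a dict with "facts", "question", and "answer" strings.
Example = Dict[str, str]


def format_example(ex: Example, with_answer: bool = True) -> str:
    """Render one example; the template itself is an illustrative assumption."""
    text = f"Facts: {ex['facts']}\nQuestion: {ex['question']}\nAnswer:"
    if with_answer:
        text += f" {ex['answer']}"
    return text


def build_few_shot_prompt(
    train_set: List[Example], query: Example, k: int = 8, seed: int = 0
) -> str:
    """Sample k demonstrations without replacement and append the unanswered query."""
    rng = random.Random(seed)          # seeded so the sampled shots are reproducible
    shots = rng.sample(train_set, k)   # raises ValueError if k > len(train_set)
    blocks = [format_example(ex) for ex in shots]
    blocks.append(format_example(query, with_answer=False))
    return "\n\n".join(blocks)
```

Setting `k=0` yields the zero-shot prompt, which contains only the query. The resulting string would then be sent to whichever model is being evaluated; that call is omitted here because the paper does not specify it in this section.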


:::info Authors:

(1) Zeming Chen, EPFL ([email protected]);

(2) Gail Weiss, EPFL ([email protected]);

(3) Eric Mitchell, Stanford University ([email protected]);

(4) Asli Celikyilmaz, Meta AI Research ([email protected]);

(5) Antoine Bosselut, EPFL ([email protected]).

:::

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

[2] https://openai.com/blog/chatgpt
